AI Camera-based driving analysis tool

Machine Learning

Computer Vision

Backend

Data Analysis

- SparkSense is a Computer Vision-oriented extension of our proprietary telematics solution.

- The system's core functionality is real-time image processing supported by complex algorithms to detect hazardous behavior on the road.

- Our ML models are adjusted to operate on the edge device.

- The National Center of Research and Development awarded us a scientific grant to support product development.

Does every slam on the brakes mean that the driver is being reckless? Is a sudden change of direction the result of a lack of attention? Or could both of these situations be life-saving maneuvers?

Current telematics solutions offered in UBI (Usage-Based Insurance) and fleet management systems can't tell the difference, as such situations are indistinguishable without more data.

We wanted to tackle this and many similar problems in our proprietary telematics solution, SparkT, by augmenting it with an intelligent camera module that recognizes such situations. The National Center of Research and Development awarded us a scientific grant to support the development of the system.

Context is crucial

The ultimate goal of the project was to develop a system to analyze the user’s driving style in terms of safety, fluidity, and economy. The core functionalities of the system are to:

- Recognize and video-register potentially dangerous maneuvers,

- Augment the accelerometer and GPS data with cartographical information,

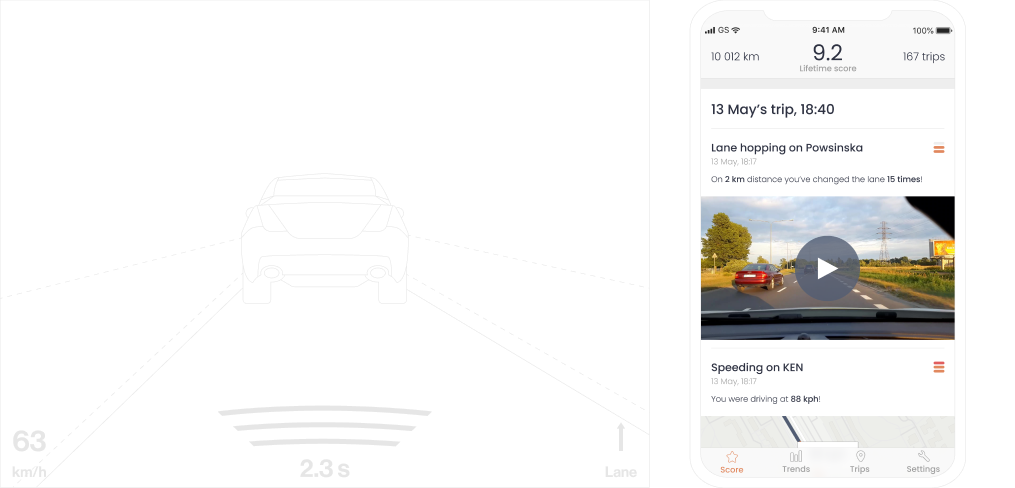

- Score each user’s trip using the collected and processed multi-parameter data,

The project involved building a monitoring device, a "smart camera", and equipping it with a machine learning engine to elevate the solution. It had to be nimble enough to carry out all the operations on the edge device but still provide the required level of accuracy.

Augmenting our SparkT system with ML-based video recognition features was the last step for our application to become a first-on-the-market fully contextual driving analysis tool.

Edge Video Recognition

We carried out extensive research to define the most common causes of road accidents and ways to address them in our system architecture.

Dangerous road events are mostly caused by:

- Excessive speed

- Non-compliance with the right of way

- Tailgating

- Careless maneuvers at zebra crossings

- Non-compliance with traffic signs

We developed a complex system that combines ML-powered video analysis with information gathered from the accelerometer and GPS. Our solution detects all of the events listed above by performing multi-sensor time-series data analysis.
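To illustrate what combining video analysis with accelerometer data can look like, here is a minimal sketch rather than the actual SparkSense pipeline. It flags harsh-braking onsets in an accelerometer series and attaches any video detection seen shortly before each onset. The threshold, the time window, the `pedestrian_ahead` label, and all class and function names are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Assumed values for illustration only; real thresholds need calibration.
HARSH_BRAKE_MS2 = -4.0    # longitudinal acceleration treated as harsh braking
CONTEXT_WINDOW_S = 2.0    # how far back to look for video context


@dataclass
class AccelSample:
    t: float           # seconds since trip start
    long_accel: float  # m/s^2, negative values mean braking


@dataclass
class VideoDetection:
    t: float    # seconds since trip start
    label: str  # hypothetical model output, e.g. "pedestrian_ahead"


def classify_brakes(
    accel: List[AccelSample], detections: List[VideoDetection]
) -> List[Tuple[float, Optional[str]]]:
    """Return (onset_time, context_label) for every harsh-braking onset.

    context_label is None when no video detection preceded the braking
    within CONTEXT_WINDOW_S, i.e. the camera saw no obvious reason for it.
    """
    events = []
    in_event = False
    for sample in accel:
        if sample.long_accel <= HARSH_BRAKE_MS2:
            if not in_event:
                in_event = True
                context = next(
                    (d.label for d in detections
                     if 0 <= sample.t - d.t <= CONTEXT_WINDOW_S),
                    None,
                )
                events.append((sample.t, context))
        else:
            in_event = False
    return events


accel = [
    AccelSample(0.0, -0.5), AccelSample(1.0, -5.0), AccelSample(1.1, -5.5),
    AccelSample(2.0, -0.2), AccelSample(10.0, -4.5),
]
detections = [VideoDetection(0.2, "pedestrian_ahead")]
events = classify_brakes(accel, detections)
```

In this sketch, the first braking event carries a context label and could be treated as a justified maneuver, while the second has none and would count against the driver.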

The goal was to record each potentially dangerous maneuver automatically, as these are essential factors affecting the driver’s score. From the insurance company's perspective, it’s crucial to calculate the precise risk posed by the driver’s dangerous behavior, so the analysis has to be detailed. For drivers, we wanted to provide visual feedback, a key feature for understanding where they made a mistake and what the situation was. Because the presented information is contextual and personalized, it becomes much easier to influence driving habits positively.

The Machine Learning heart

Building such a multi-featured system while preventing its complexity from overloading the edge device processor was possible thanks to our telematics experience and many experiments. We decided to build our camera with off-the-shelf components, such as TPU processors, to lower the unit cost of each device and make the solution more widely accessible.

For the ML modules, we tested many techniques and finally chose:

- deep learning methods with convolutional and recurrent neural networks for our video assessment,

- random forest and statistical models to enrich data with contextual cartographical data (e.g., defining road type based on nearby settlements, speed bumps, or city border outlines),

- heuristics, clustering, and random forest to define algorithms for hazardous event detection and add their outcomes to the final trip evaluation.
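As an example of the kind of heuristic that can sit alongside the learned models, the sketch below flags tailgating using time headway (the gap to the vehicle ahead divided by own speed). This is an illustration, not the rule used in SparkSense: it assumes the gap in meters is already estimated by the video module, and the 1-second headway limit, the 3-second minimum duration, and the function names are all hypothetical.

```python
from typing import List, Tuple


def time_headway_s(gap_m: float, speed_mps: float) -> float:
    """Seconds needed to cover the gap to the lead vehicle at current speed."""
    if speed_mps <= 0:
        return float("inf")  # a stationary vehicle is not tailgating
    return gap_m / speed_mps


def tailgating_intervals(
    samples: List[Tuple[float, float, float]],
    min_headway_s: float = 1.0,   # assumed limit
    min_duration_s: float = 3.0,  # assumed minimum time to count as an event
) -> List[Tuple[float, float]]:
    """Return (start, end) times where headway stayed below the limit.

    samples: (t_seconds, gap_m, speed_mps) tuples sorted by time.
    Short dips below the limit are ignored to reduce false alarms.
    """
    intervals = []
    start = None
    last_t = None
    for t, gap_m, speed_mps in samples:
        if time_headway_s(gap_m, speed_mps) < min_headway_s:
            if start is None:
                start = t
            last_t = t
        else:
            if start is not None and last_t - start >= min_duration_s:
                intervals.append((start, last_t))
            start = None
    # Close an interval that is still open at the end of the trip.
    if start is not None and last_t - start >= min_duration_s:
        intervals.append((start, last_t))
    return intervals


# Six seconds at a 10 m gap and 20 m/s (0.5 s headway), then one short dip.
samples = [(t, 10.0, 20.0) for t in range(6)]
samples += [(6, 50.0, 20.0), (7, 10.0, 20.0), (8, 50.0, 20.0)]
intervals = tailgating_intervals(samples)
```

Requiring a minimum duration is a design choice: a single low-headway reading, for instance when another car cuts in, should not by itself lower the trip score.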

According to Allied Market Research studies, the most rapid growth will pertain to highly advanced, multi-parameter systems in the telematics area. We wanted to fulfill this prediction by building a system that will recognize many types of events and behaviors that are not yet addressed in other solutions on the market. This way our proprietary telematics technology gains a competitive advantage with the broadest, most precise driving style assessment.

Innovation incoming

We’re proud to say that our ML-powered extension to the SparkT system is ready and operating! The prototype of a smart camera was designed and built by hand by one of our senior software engineers. To make sure it fulfills all the scientific grant requirements that we set before starting the R&D, it had to be tested thoroughly.

We did performance and load tests and learned some important lessons in the process:

- Performance tests mirrored the traffic of 2,240 simultaneous users under regular load and 8,000 at peak, including all the data transfer from the smartphone and the smart camera that they would generate.

- We had to optimize some of the algorithms (e.g., the snap-to-road algorithm we designed for the system to recognize trip routes based on GPS position and compare driver speed with speed limits) to make the solution better suited to operations performed in the cloud.

- We had to switch from our initial cloud vendor to optimize storage and request costs. Batching visual event uploads and using pre-signed URLs to bypass our API allowed us to cut the cost by 90%.
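For readers unfamiliar with the snap-to-road idea mentioned in the list above, the following is a simplified sketch, not our production algorithm. It matches a GPS fix to the nearest road segment using a local flat-earth approximation and compares the measured speed with that segment's limit. The `RoadSegment` structure, the 30 m matching radius, and the function names are assumptions made for the example. A real implementation would also use heading and route continuity.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0
LatLon = Tuple[float, float]  # (latitude, longitude) in degrees


@dataclass
class RoadSegment:
    start: LatLon
    end: LatLon
    speed_limit_kmh: float


def _to_xy(lat: float, lon: float, ref_lat: float, ref_lon: float):
    """Project to meters around a reference point (equirectangular approx.)."""
    x = math.radians(lon - ref_lon) * math.cos(math.radians(ref_lat)) * EARTH_RADIUS_M
    y = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return x, y


def distance_to_segment_m(point: LatLon, seg: RoadSegment) -> float:
    """Shortest distance from the point to the segment, in meters."""
    # Use the GPS point as the origin, so the answer is |closest point|.
    ax, ay = _to_xy(*seg.start, *point)
    bx, by = _to_xy(*seg.end, *point)
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(ax, ay)
    # Projection of the origin onto the segment, clamped to its endpoints.
    t = max(0.0, min(1.0, -(ax * dx + ay * dy) / seg_len_sq))
    return math.hypot(ax + t * dx, ay + t * dy)


def snap_to_road(
    point: LatLon, segments: List[RoadSegment], max_distance_m: float = 30.0
) -> Optional[RoadSegment]:
    """Return the nearest segment, or None if nothing is close enough."""
    best = min(segments, key=lambda s: distance_to_segment_m(point, s), default=None)
    if best is None or distance_to_segment_m(point, best) > max_distance_m:
        return None
    return best


def is_speeding(point: LatLon, speed_kmh: float, segments: List[RoadSegment]) -> bool:
    seg = snap_to_road(point, segments)
    return seg is not None and speed_kmh > seg.speed_limit_kmh


# Two parallel east-west roads roughly 111 m apart (made-up coordinates).
roads = [
    RoadSegment((52.0, 21.0), (52.0, 21.01), speed_limit_kmh=50),
    RoadSegment((52.001, 21.0), (52.001, 21.01), speed_limit_kmh=90),
]
fix = (52.0001, 21.005)  # about 11 m north of the first road
```

With these inputs, `is_speeding(fix, 70, roads)` evaluates against the 50 km/h road, since that is the segment the fix snaps to. The linear scan over all segments is the obvious part to optimize at scale, for example with a spatial index.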

We’re now field-testing the device to fine-tune some hardware parameters. This case study will be updated soon!